I am not sure whether laziness is a side effect of automation or automation was born out of laziness. Either way, it has helped transform IT by increasing efficiency and reducing errors in repetitive tasks. It removes the human element of error, speeds up deployments and frees up valuable time that IT personnel can spend on more important tasks that need more human brain cells.

Ever since I learned about SCVMM’s ability to deploy Hyper-V on bare metal hardware, I have been eager to use it in my environment. Why should I spend hours manually installing the OS, installing Hyper-V, configuring networking and carrying out other important but repetitive configuration tasks when SCVMM can do it for me in minutes?

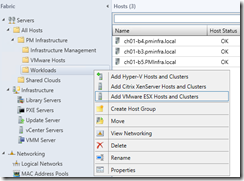

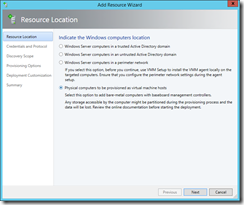

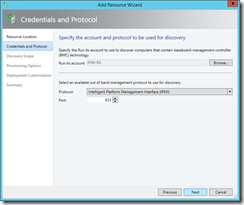

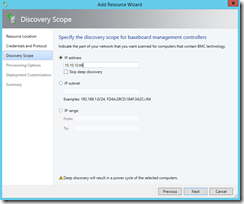

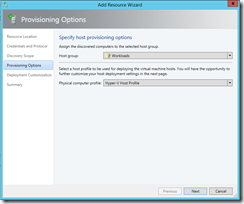

I started with one of my SuperMicro servers. All the prerequisite configuration in SCVMM was in place: the hardware profile was created, the physical servers were wired and IPMI was configured. I then started the “Add Resource” wizard and selected the option to deploy Hyper-V on bare metal. I provided the IP address of the server’s IPMI controller, selected the Run As account for IPMI and got to the point where the wizard started deep discovery of the server:

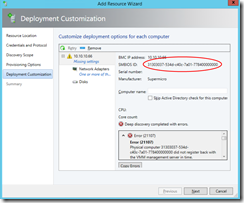

It powered on the physical server and I sat there waiting for it to download the image needed for deep discovery and continue on to the next steps. Instead, deep discovery took a long time and finally returned “Error (21107)”, which claimed “Physical computer GUID did not register back with the VMM management server in time”:

Notice the GUID in the error dialog; it is going to be handy later. This is the GUID returned by the IPMI controller on the physical server we are trying to deploy.

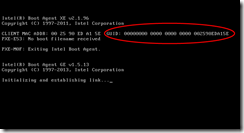

To find out why the server did not register with VMM, I turned to the server console. There I was surprised to find the error “PXE-E53 No boot filename received”. I was expecting VMM to provide the boot image, but that wasn’t the case here:

Note again the GUID reported by the console while it is trying to boot from PXE. You might have noticed that the GUID reported by IPMI to the “Add Resource” wizard and the GUID reported at the console by PXE are different. Maybe this mismatch is our issue? Maybe that’s why VMM refused to provide a boot image to the server?

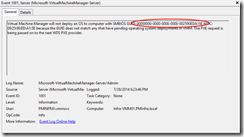

I didn’t know the answer to this question until I looked into the event logs. Under Microsoft-VirtualMachineManager-Server/Admin I found event ID 1001, claiming that the GUID does not match any pending deployments and that VMM will pass the PXE request on to the next WDS PXE provider:

Notice the GUID here. It matches what was shown on the console, not what was reported during discovery. VMM is expecting a PXE request from the GUID reported during discovery, not from the one the server actually sends during PXE boot! Since my environment had a purpose-built WDS server, it did not have any boot images of its own. If VMM did not serve the PXE request, that request had nowhere else to go, which understandably resulted in the console receiving no boot file.
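If you would rather not click through Event Viewer, the same events can be pulled with PowerShell on the VMM management server. This is only a rough sketch: it assumes the GUID and MAC address appear in the event message in their usual dashed or colon-separated form, and the two regular expressions are my own guesses at that format rather than anything VMM documents.

```powershell
# Log name as shown in Event Viewer on the VMM management server.
$logName = 'Microsoft-VirtualMachineManager-Server/Admin'

# Assumed formats: a standard dashed GUID and a MAC address written
# with '-' or ':' separators. Adjust if your events look different.
$guidPattern = '[0-9A-Fa-f]{8}(-[0-9A-Fa-f]{4}){3}-[0-9A-Fa-f]{12}'
$macPattern  = '([0-9A-Fa-f]{2}[:-]){5}[0-9A-Fa-f]{2}'

# Fetch the most recent event ID 1001 entries and pull out the first
# GUID and MAC address found in each message (empty string if none).
Get-WinEvent -FilterHashtable @{ LogName = $logName; Id = 1001 } -MaxEvents 20 |
    ForEach-Object {
        [pscustomobject]@{
            TimeCreated = $_.TimeCreated
            Guid        = [regex]::Match($_.Message, $guidPattern).Value
            Mac         = [regex]::Match($_.Message, $macPattern).Value
        }
    }
```

Compare the `Guid` column with the GUID from the error dialog; if they differ, you are most likely looking at the same mismatch described here.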

Now I knew what my issue was and why the server was not deploying as expected. To get to the bottom of it, I worked through a great troubleshooting flowchart I came across on the SCVMM blog. As far as I could tell, everything in the flowchart was as expected. What I did know was that the server wasn’t sending the expected GUID to VMM during the PXE boot process. Having tried changing the GUID of the server to no avail, I knew it would be an uphill battle if I called SuperMicro and asked them to change the behavior. It would turn into a discussion with the SuperMicro product management team or developers, as it was beyond the scope of their support engineers. I did not have the time or energy to waste on such an undertaking. I had already had a bad experience with BIOS updates.

It came down to figuring out whether there was a way to override the wizard and tell VMM which GUID to expect from the server, so that VMM could provide the boot image and carry out the deployment. Fixing any problem is maybe 10% actual fixing and 90% fact finding. You just keep turning over stones until you find the clue you desperately need. So I went looking again and found this great article from Hans Vredevoort. It talked about an issue with HP iLO and I found striking similarities. And of course I found my answer too!

The answer was to use PowerShell and issue cmdlets to start the deployment, specifying the correct GUID and the MAC address of the network adapter performing the PXE boot, both of which can be found in the event log I mentioned earlier.
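For readers who want a starting point, here is a minimal sketch of what such a call might look like with `New-SCVMHost`. These are not the exact commands from my environment. Every name, address, GUID and MAC address below is a hypothetical placeholder, and parameter names can differ between VMM versions, so check `Get-Help New-SCVMHost -Full` on your own server before relying on it.

```powershell
# Sketch only: all names and values below are hypothetical placeholders.
Import-Module virtualmachinemanager

# Connect to the VMM management server (hypothetical name).
Get-SCVMMServer -ComputerName 'vmm01.contoso.local' | Out-Null

# Look up the objects the deployment needs.
$runAs       = Get-SCRunAsAccount -Name 'IPMI Run As Account'
$hostGroup   = Get-SCVMHostGroup -Name 'All Hosts'
$hostProfile = Get-SCPhysicalComputerProfile -Name 'Hyper-V Bare Metal'

$params = @{
    ComputerName                = 'hv-node01'
    VMHostGroup                 = $hostGroup
    VMHostProfile               = $hostProfile
    BMCAddress                  = '192.0.2.10'
    BMCRunAsAccount             = $runAs
    BMCProtocol                 = 'IPMI'
    # The GUID the server actually sends during PXE boot (from the
    # event log), not the one returned by IPMI discovery.
    SMBiosGuid                  = '00000000-0000-0000-0000-000000000000'
    # MAC address of the adapter performing the PXE boot.
    ManagementAdapterMACAddress = '00-00-00-00-00-00'
}

# Start the bare metal deployment as a VMM job.
New-SCVMHost @params -RunAsynchronously
```

Because the GUID and MAC address are passed in explicitly, VMM knows which PXE request to answer, so the mismatch with the IPMI-reported GUID no longer gets in the way.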

I also found a blog post on TechNet that went a bit deeper in explaining bare metal deployment using PowerShell.

Both methods are valid. Which one you should use depends on whether you really want to use the power of deep discovery to your advantage, or you already know your server specifics and can live without deep discovery. I knew exactly what my server configuration was, and the scripts included in my physical computer profile accounted for it. I am not going to repeat the cmdlets I used as they are similar to what Hans mentioned in his article.

In the end I was happy to see the server boot to the image provided by VMM:

and the event log reflecting success:

At the end of the day, it was a good feeling that my search-fu had won me another battle against SuperMicro. Bing: 2, SuperMicro: 0!

On to deploying the rest of the bare metal and making it a bit more useful. Cheers!

Thank you very much for your help! One thing I ran into, though, was that I had to inject the RAID drivers into the WinPE image for the RAID partition to register (motherboard X10SRW-F). I’m now at the stage where WinPE boots, but I am having issues with the HDD format before the OS is applied. I am getting the error “The selected disk is not a fixed mbr disk the active command can only be used on fixed mbr.” Any suggestions? Any help would be appreciated.