I wrote an article on LinkedIn titled “Your gateway is responding! What is network virtualization doing to your network?”. This article is the technical follow-up to that piece.

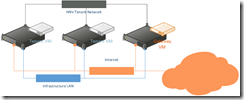

Not too long ago, I set out to create virtualized networks using Microsoft Hyper-V Network Virtualization (HNV). I merrily went on to create the required fabric and VM network components, dedicated a host to the HNV gateway function, and created the client VMs and a gateway VM. Here’s what the setup looks like:

The setup is pretty simple: two tenant VMs connected to the same virtualized VM network, and a gateway VM with two interfaces on a host dedicated to HNV gateway functions. Setting up a gateway VM running Windows Server 2012 R2 is more involved and will be the subject of a future post.

Now that the VMs were created and the gateway was configured in the fabric, I started my standard network testing procedure. First step: the obvious PING!

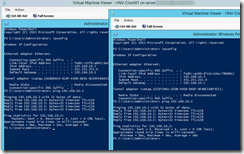

First, I pinged from one tenant VM to the other…

The two VMs were on separate hosts, and since they could ping each other, HNV was functioning as intended. Next, it was time to test the gateway. Instinctively, you would ping the gateway IP shown in the client’s IP configuration, and that ping would come back successfully as well…

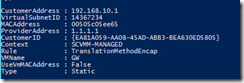

A lesson when troubleshooting HNV: Hyper-V creates a router interface on every SCVMM-managed host to which a given virtualized VM network is assigned. You can see this by running `Get-NetVirtualizationLookupRecord` on the Hyper-V host. The output includes a record whose customer address is the tenant VM’s “gateway address” and whose VMName is “GW”. However, you will never actually find a “GW” VM on the host.

So the old way of thinking doesn’t work here. If you get a ping response from the “gateway”, you are only getting a response from the Hyper-V host where your VM is running. That host is responsible for handling NVGRE packets and passing them on to the correct host, where your actual gateway VM (as registered in the fabric) is located.
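To make the “GW” record concrete, here is a toy Python model of how the local host treats a ping, assuming a lookup table reduced to three fields. The `LookupRecord` class and `ping_responder` function are hypothetical illustrations, not part of any Microsoft API, and real `Get-NetVirtualizationLookupRecord` output has more columns. Giving the “GW” record a provider address of `None` is a simplification, since its actual value isn’t shown in this article.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class LookupRecord:
    """Simplified, hypothetical stand-in for one row of Get-NetVirtualizationLookupRecord."""
    customer_address: str            # CA: the IP address the tenant VM sees
    provider_address: Optional[str]  # PA of the hosting Hyper-V host (None for "GW" in this model)
    vm_name: str                     # "GW" marks the per-host HNV router interface


def ping_responder(dest_ca: str, local_pa: str, records: List[LookupRecord]) -> str:
    """Toy model: who answers an ICMP echo sent from a VM on the host with local_pa."""
    record = next((r for r in records if r.customer_address == dest_ca), None)
    if record is None:
        # Not a CA in this VM network: routed toward the gateway VM (not modeled here)
        return "routed toward gateway VM"
    if record.vm_name == "GW":
        # The HNV router interface exists on every host, so the local host replies
        return f"local host {local_pa}"
    if record.provider_address == local_pa:
        return f"{record.vm_name} on local host"
    return f"{record.vm_name} on {record.provider_address} via NVGRE"


records = [
    LookupRecord("192.168.10.1", None, "GW"),
    LookupRecord("192.168.10.2", "10.10.11.133", "HNV-Client01"),
]
print(ping_responder("192.168.10.1", "10.10.11.133", records))  # local host 10.10.11.133
print(ping_responder("4.2.2.2", "10.10.11.133", records))       # routed toward gateway VM
```

The point of the model is the second branch: a reply from the gateway address proves only that the local host’s router interface is alive, not that the gateway VM is reachable.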

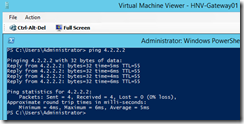

At this point, the only way to verify connectivity through the gateway to remote destinations was to ping a remote IP. I decided to ping one of the public DNS servers, 4.2.2.2. The result wasn’t what I was hoping for: the ping came back with “Request timed out”. So I tried the same from the gateway VM. Not surprisingly, the gateway VM was able to ping the public DNS IP without any issue:

Now I was getting in really deep. As the saying goes, the fix is quick; finding it is painful. It’s the process of getting to the root cause that takes the most time. It took me days of troubleshooting, deleting and recreating the gateway VM, and trying many other things to no avail. That included a support case I had open with Microsoft (which was non-decremented after I found the solution and relayed my findings, documented here, back to the engineers I was working with). This is not to say Microsoft wasn’t capable; I simply didn’t have the patience to wait days before reaching someone who understood the magnitude of the issue and could troubleshoot it quickly and effectively without layers of escalation.

Anyway, how did I troubleshoot the problem? I pulled out the newest tool in the Microsoft toolset: Message Analyzer! I did try Network Monitor, but since it does not present NVGRE information the way Message Analyzer does, I found myself struggling. I needed something that could help someone like me who doesn’t breathe network captures for a living, and Message Analyzer was the answer. If you are up for an adventure, feel free to use Network Monitor instead.

Before we get into the captures, let’s take a look at the IP addressing:

| IP Address | Machine Name | Notes |
| --- | --- | --- |
| 192.168.10.2 | HNV-Client01 | Tenant VM connected to the virtualized network |
| 192.168.10.1 | Gateway | Not the actual IP of the HNV gateway VM |
| 10.10.11.133 | VM Host | Host where the HNV-Client01 VM runs |
| 10.10.11.132 | Gateway Host | Host where the HNV-Gateway01 VM runs |

It is important to note that the host IP addresses above aren’t the addresses assigned to each host’s management NIC. HNV sends NVGRE packets over the “provider address”. Assuming the SCVMM fabric is configured correctly, you can check a host’s provider address by running `Get-NetVirtualizationProviderAddress`. The table above lists each host’s provider address; the VM addresses are actual.
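The split between customer and provider addresses can be sketched with a minimal Python model that uses the provider addresses from the table. `VM_HOST_PA`, `NvgreAddressing`, and `nvgre_addressing` are hypothetical names; the model assumes the next hop for external traffic is the gateway VM and ignores MAC lookups entirely.

```python
from typing import Dict, NamedTuple

# Provider addresses (PAs) from the table above, keyed by the VM each host runs
VM_HOST_PA: Dict[str, str] = {
    "HNV-Client01": "10.10.11.133",
    "HNV-Gateway01": "10.10.11.132",
}


class NvgreAddressing(NamedTuple):
    outer_src: str  # PA of the sending host
    outer_dst: str  # PA of the host running the next-hop VM
    inner_src: str  # CA of the tenant VM, carried unchanged inside GRE
    inner_dst: str  # final destination, e.g. the public DNS server


def nvgre_addressing(src_vm: str, next_hop_vm: str, inner_src: str, inner_dst: str) -> NvgreAddressing:
    """Toy model: which addresses appear in the outer and inner IP headers."""
    return NvgreAddressing(
        outer_src=VM_HOST_PA[src_vm],
        outer_dst=VM_HOST_PA[next_hop_vm],
        inner_src=inner_src,
        inner_dst=inner_dst,
    )


print(nvgre_addressing("HNV-Client01", "HNV-Gateway01", "192.168.10.2", "4.2.2.2"))
```

Only the outer header ever carries provider addresses; the tenant’s own 192.168.10.x addressing travels untouched inside the encapsulation.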

Next, I set up Message Analyzer on the host where the tenant VM was running and on the host where the gateway VM was running. I also set it up on both VMs so I had end-to-end visibility. Most importantly, I needed to make sure I could see the NVGRE-encapsulated packets and follow them to the point of failure.

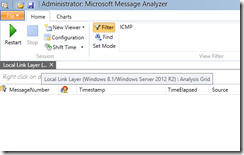

From the Message Analyzer start page, I selected “Local Link Layer (Windows 8.1/Windows Server 2012 R2)”:

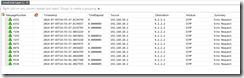

Once captures were running in all four locations (two VMs and two hosts), I started a ping from the client VM to the external IP address 4.2.2.2 and waited for the expected timeout. Then I looked at Message Analyzer on the client VM, expecting a familiar result:

All I saw were four ICMP requests leaving the interface with no responses coming back. This was expected.

Next, I checked the capture on the gateway VM. What I found there was surprising: no ICMP packets were making it to the VM at all!

This meant there was a breakdown somewhere along the path: the client VM handing the packets to its host, that host passing them to the other host using NVGRE encapsulation, and that host delivering them to the gateway VM. Since this could mean many things, my natural next step was to look at Message Analyzer on the Hyper-V host where the client VM was running:

What was interesting here was that I saw three packets on the host for each packet on the client VM (that is, 12 items for the 4 ICMP requests sent by the client VM):

So let’s dig in. The first packet of each set of three looked quite ordinary. It contained the source and destination IPs, the source and destination MAC addresses, and other protocol details. Interestingly, the destination MAC address wasn’t that of a NIC on the gateway VM; instead, it was the MAC address we noticed earlier when we ran `Get-NetVirtualizationLookupRecord` on the Hyper-V host where the client VM was running!

So it was time to look at the next packet:

Notice that this packet contains the correct MAC address assigned to the NIC on HNV-Gateway01 (verified with `ipconfig /all`). However, it looks a bit different from the one before: it is much bigger and contains GRE information. You will notice in the picture above that the source and destination IPs in the GRE portion are the provider addresses we noted earlier! There is more to look into in the GRE packet if we wanted to, but for now let’s not worry about it. It is certainly great to see that this host intends to send the packet via GRE to the correct destination host. So let’s head over to the host where the gateway VM is running:
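For readers curious about the GRE portion, the header layout NVGRE uses is defined in RFC 7637: a GRE header with the Key bit set, protocol type 0x6558 (Transparent Ethernet Bridging), and a 32-bit key carrying a 24-bit Virtual Subnet ID (VSID) plus an 8-bit FlowID. The Python sketch below packs and parses that header and does rough size arithmetic showing why the encapsulated packet is bigger. The VSID 5001 is a made-up value, and the byte counts assume IPv4, no VLAN tag, no FCS, and Windows’ default 32-byte ping payload; they are not read from the captures above.

```python
import struct

GRE_FLAG_KEY = 0x2000  # K bit set; C (checksum) and S (sequence) bits clear, version 0
PROTO_TEB = 0x6558     # Transparent Ethernet Bridging: the payload is a full Ethernet frame


def build_nvgre_header(vsid: int, flow_id: int = 0) -> bytes:
    """Pack the 8-byte GRE header used by NVGRE (24-bit VSID + 8-bit FlowID in the key)."""
    if not 0 <= vsid < 1 << 24:
        raise ValueError("VSID must fit in 24 bits")
    if not 0 <= flow_id < 1 << 8:
        raise ValueError("FlowID must fit in 8 bits")
    return struct.pack("!HHI", GRE_FLAG_KEY, PROTO_TEB, (vsid << 8) | flow_id)


def parse_nvgre_header(header: bytes) -> tuple:
    """Return (vsid, flow_id) from the first 8 bytes of an NVGRE header."""
    flags, proto, key = struct.unpack("!HHI", header[:8])
    if flags != GRE_FLAG_KEY or proto != PROTO_TEB:
        raise ValueError("not an NVGRE header")
    return key >> 8, key & 0xFF


print(build_nvgre_header(5001).hex())  # 2000655800138900

# Rough size arithmetic under the assumptions stated above
inner_frame = 14 + 20 + 8 + 32    # Ethernet + IPv4 + ICMP header + 32-byte ping payload
outer_overhead = 14 + 20 + 8      # outer Ethernet + outer IPv4 (protocol 47) + GRE header
print(inner_frame, inner_frame + outer_overhead)  # 74 116
```

The 42 extra bytes are the outer Ethernet header, the outer IPv4 header addressed between the two provider addresses, and the GRE header itself; the original Ethernet frame, including the gateway VM’s MAC address, rides inside unchanged.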

Here we see that the host where the gateway VM is running did receive the ICMP request, with the required information in the GRE-encapsulated packet. So from an HNV perspective, everything seems to be working so far. The question is: if the packet made it to the host, why didn’t the host pass it on to the gateway VM?

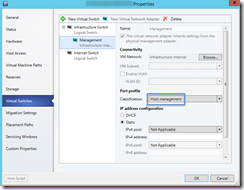

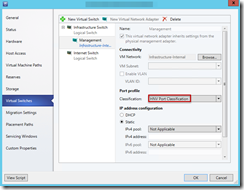

This is when I started looking at the fabric and host configuration. When I looked at the “Virtual Switches” page of the host properties, something caught my eye:

I had assigned an “out of the box” port classification. I vaguely remembered reading somewhere that a port profile should be created for the gateway, but I hadn’t created one because I didn’t understand why it was needed. It was now obvious that I needed to create a port profile and assign it to the host where the gateway VM was running.

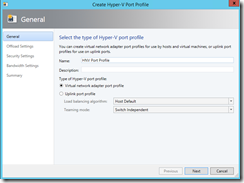

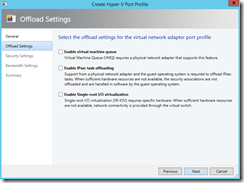

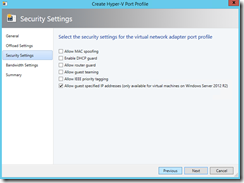

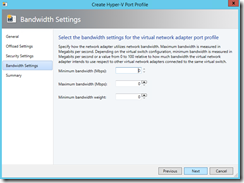

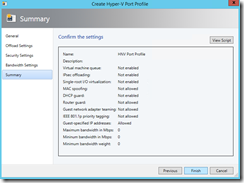

In the SCVMM fabric, I first created a port profile (accepting the defaults):

I think the most important thing to note during this process was the “Allow guest specified IP addresses…” option, which is not enabled in the built-in “Host Management” port profile.
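One way to picture why this option mattered is a toy address filter, assuming the host drops a decapsulated packet unless its inner destination is an address assigned to the VM’s virtual NIC. That assumption fits what the captures showed (packets reached the gateway host but never the gateway VM), since a gateway must accept traffic addressed to destinations beyond it, such as 4.2.2.2. This is a simplified, hypothetical model, not the Hyper-V switch’s actual implementation, and 192.168.10.250 is an invented address for the gateway’s tenant-facing NIC.

```python
from dataclasses import dataclass
from typing import FrozenSet


@dataclass(frozen=True)
class VirtualPort:
    """Toy model of a vNIC's address filter; not the real Hyper-V switch logic."""
    assigned_ips: FrozenSet[str]             # CAs that VMM assigned to the vNIC
    allow_guest_specified_ips: bool = False  # the port profile option discussed above


def accepts(port: VirtualPort, inner_dst_ip: str) -> bool:
    """Would a decapsulated packet with this inner destination reach the VM?"""
    if inner_dst_ip in port.assigned_ips:
        return True
    # Traffic the gateway must route onward is addressed to someone else
    return port.allow_guest_specified_ips


# 192.168.10.250 is a hypothetical CA for the gateway's tenant-facing NIC
default_port = VirtualPort(frozenset({"192.168.10.250"}))
gateway_port = VirtualPort(frozenset({"192.168.10.250"}), allow_guest_specified_ips=True)
print(accepts(default_port, "4.2.2.2"), accepts(gateway_port, "4.2.2.2"))  # False True
```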

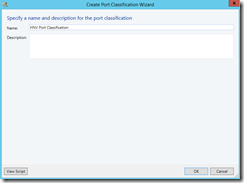

Next, I created a port classification:

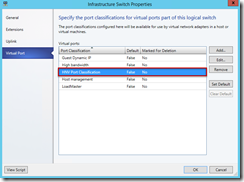

Next, I modified the logical switch assigned to the host adapter to include the newly created port profile:

Finally, I modified the host properties to use the new port classification:

After these changes, Message Analyzer on HNV-Gateway01 showed the ICMP packets from the tenant VM:

You don’t see individual requests and responses in Message Analyzer because it groups them under one “operation”. If you expand an operation, you will see the request and the response.
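Message Analyzer’s exact grouping logic isn’t described here, but ICMP itself makes such pairing straightforward: an echo reply carries the same identifier and sequence number as its request (RFC 792). A minimal Python sketch, with hypothetical names, that groups a capture into request/reply “operations” this way:

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

ECHO_REPLY, ECHO_REQUEST = 0, 8  # ICMP type values from RFC 792


@dataclass
class IcmpEcho:
    icmp_type: int
    src: str
    dst: str
    identifier: int
    sequence: int


@dataclass
class Operation:
    request: IcmpEcho
    reply: Optional[IcmpEcho] = None


def group_operations(messages: List[IcmpEcho]) -> List[Operation]:
    """Pair each echo reply with its request by addresses, identifier and sequence."""
    operations: List[Operation] = []
    pending: Dict[Tuple[str, str, int, int], Operation] = {}
    for msg in messages:
        if msg.icmp_type == ECHO_REQUEST:
            op = Operation(request=msg)
            operations.append(op)
            pending[(msg.src, msg.dst, msg.identifier, msg.sequence)] = op
        elif msg.icmp_type == ECHO_REPLY:
            # A reply swaps source and destination but echoes identifier and sequence
            op = pending.pop((msg.dst, msg.src, msg.identifier, msg.sequence), None)
            if op is not None:
                op.reply = msg
    return operations


# Four pings from HNV-Client01 to 4.2.2.2; identifier 1 is a made-up value
trace = []
for seq in range(1, 5):
    trace.append(IcmpEcho(ECHO_REQUEST, "192.168.10.2", "4.2.2.2", 1, seq))
    trace.append(IcmpEcho(ECHO_REPLY, "4.2.2.2", "192.168.10.2", 1, seq))
ops = group_operations(trace)
print(len(ops), all(op.reply is not None for op in ops))  # 4 True
```

Fed only the four requests from the failing case, the same function returns four operations whose `reply` is `None`, which matches the unanswered requests seen earlier on the client VM.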

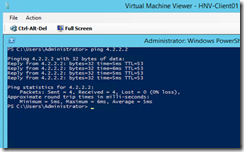

And finally, the client VM was able to connect to external destinations:

You know you are a geek when you care the least about the hour of the day while solving puzzles. On to the next puzzle. Cheerio!
